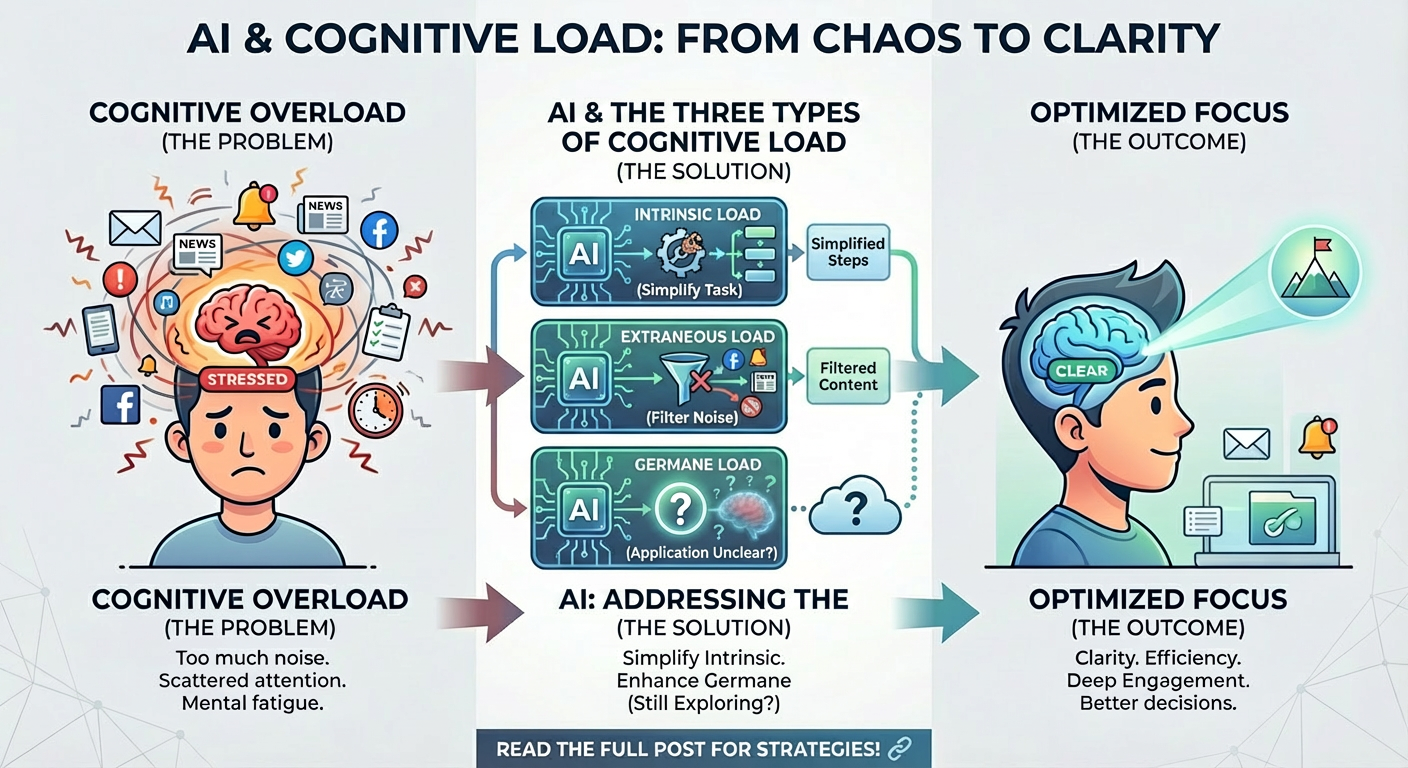

Where are we currently focusing AI to optimise our cognitive load?

Let’s start with a simplified explanation of cognitive load:

Cognitive load refers to the amount of mental effort being used in working memory, or put simply, how much your brain is juggling right now.

Since working memory has limited capacity, it is important to ensure it is used on the most value-creating tasks.

The three types of cognitive load are:

- Extraneous: This type of load is a waste of time and considered toil. The goal should be to eliminate this type of cognitive load. Remembering a command line to build and test your application for the 1000th time, attending low-value meetings, preparing weekly status updates, manually testing the same flow daily before each deploy, or dealing with unintuitive dashboards or tools are typical examples of this type of load.

- Intrinsic: This type of cognitive load is inherent in the task’s complexity. It cannot be eliminated, but it can be managed. For an engineering manager, this could include making impactful architectural decisions with incomplete information, making leadership trade-offs regarding team autonomy, building team alignment, and understanding the technical architecture of a distributed system. Some of these could be inherently simple or complex.

- Germane: This is where the value is created, and you want to spend most of your time here. This is where intuition, customer intimacy, and understanding the “why” and the problem space come into play. Our brain processes new information and integrates it with what we already know to make judgments and decisions. Germane cognitive load tends to take time, requires long-term experience, and cannot be fast-tracked substantially.

Where AI helps (today):

As of today, it is clear that AI shines in the extraneous space. It’s effective at automating mundane tasks and reducing the need to expend human cognitive capacity on them. Aggregating multiple sources of truth and building a single dashboard, summarising meetings, or abstracting unintuitive legacy interfaces into English using agentic applications are good examples of that.

As for intrinsic cognitive load, we cannot make a complex system less complex. However, we can utilise AI to fast-track the learner’s acquisition of prior knowledge (e.g., AI tutoring or pairing), or help the learner decompose a complex system into its components and break down a complex problem into bounded chunks (e.g., by partitioning the domain space into subdomains with smaller context).

When it comes to germane cognitive load, AI has made little headway. The reason is that in this space, we are dealing with hard problems and have limited understanding of how the brain, cognition, and metacognition work. While AI shows promise in understanding existing institutional knowledge, this space is about something more: actively building a mental framework for integration and sense-making, connecting existing domain knowledge to new knowledge, and cultivating understanding and wisdom over time.

My questions and hypotheses are:

Most of the new value creation happens with germane cognitive capacity. Current LLM architectures are not capable of operating in this space (or are faking it at best), and perhaps world models are one way researchers are trying to tackle it. The question is whether a model trained at a specific point in time, with no active memory, can achieve this level of cognition.

The most significant impact AI can have today is to eliminate humans’ extraneous cognitive load and help manage their intrinsic load. This means we will have more capacity to spend our brain cycles on germane tasks. That is why some argue that critical and systems thinking, along with creativity, are the competitive advantages of the next generation of workers.

While there are numerous definitions of AGI and ASI, one might argue that if AI can operate effectively in the germane space, we have reached that milestone, since it means humans have lost their last competitive advantage.